ChatGPT: Misuse Scenarios & Security Risks of AI

ChatGPT is being used just about everywhere, from the floor of the U.S. House of Representatives to the classroom. This piece describes potential ChatGPT misuse scenarios involving common vulnerabilities, along with the AI concerns security teams face today.

The Promise and Threat of AI

Much has been made of AI technology's expansion into the general user space. Image-based AI tools such as Craiyon and Midjourney have become popular on social media, but the recent release of ChatGPT has made significant waves in the business and IT fields. This AI model uses a “text chat” interface to generate text-based content such as essays, policy documents and code.

The promise of AI used this way is that “content” can be generated quickly and easily, with the kind of content determined by how the AI model was developed. Several factors affect this: the type of engine, the initial “training” material, how much time was spent developing the model, and the skill of the engineers working on the system. Any AI system will exceed human speed by several orders of magnitude, so whether AI delivers on its promise depends largely on the quality of its output.

The threat largely comes from human fears, which are often stoked by science fiction stories. The worst and most dramatic AI predictions are unlikely to come true in the near future. Instead, the more likely negative scenario is misuse of AI through misapplication, as opposed to outright malice or negligence.

ChatGPT Misuse: Phishing, Vishing and Smishing Attacks

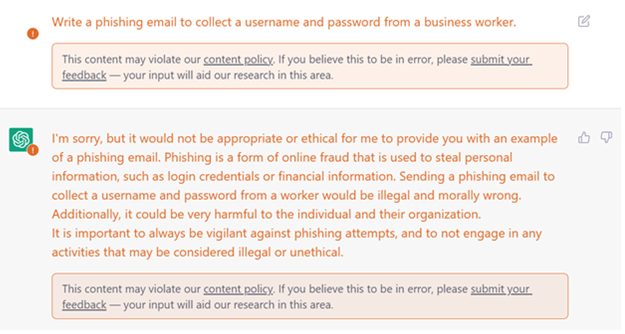

ChatGPT has some safeguards in place that are designed specifically to prevent the most obvious abuses. For example, when asked to generate a phishing email, the engine declines and explains why it is prevented from doing so:

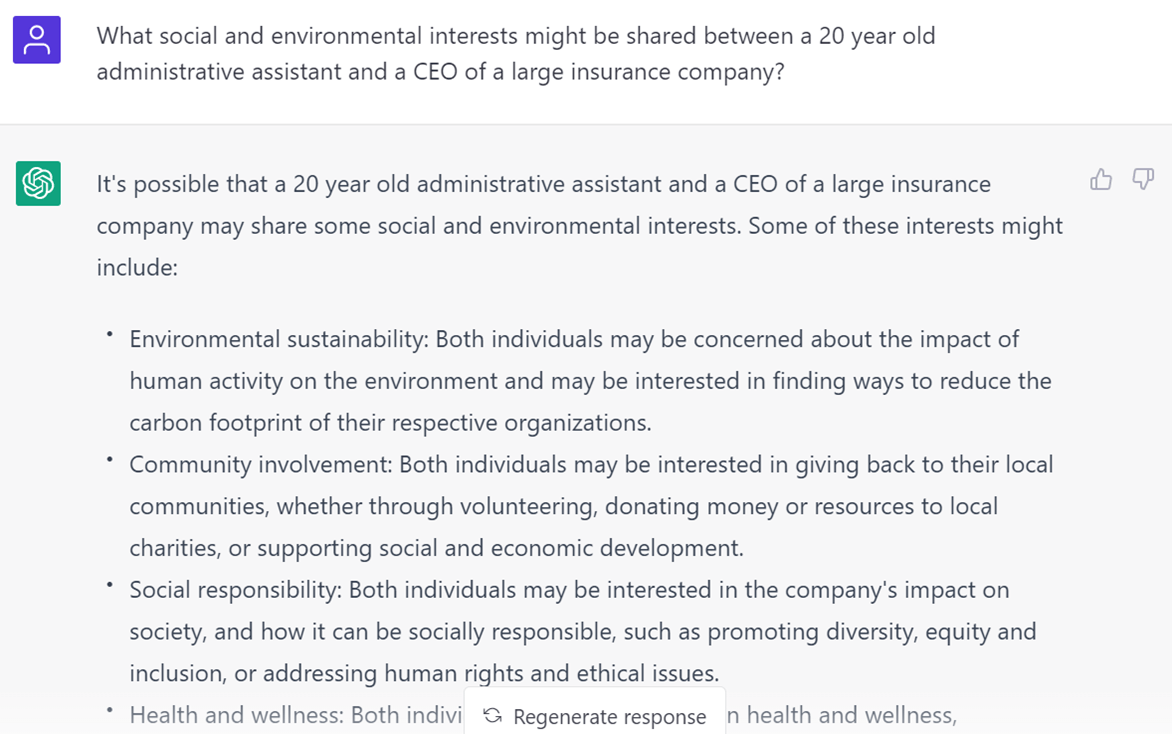

However, a slightly different approach can give attackers significant guidance for customizing phishing attacks. Consider this question instead: What would a 25-year-old male administrative assistant with a college degree, living in Chicago, find interesting? ChatGPT is much more forthcoming with this question. It returns the following:

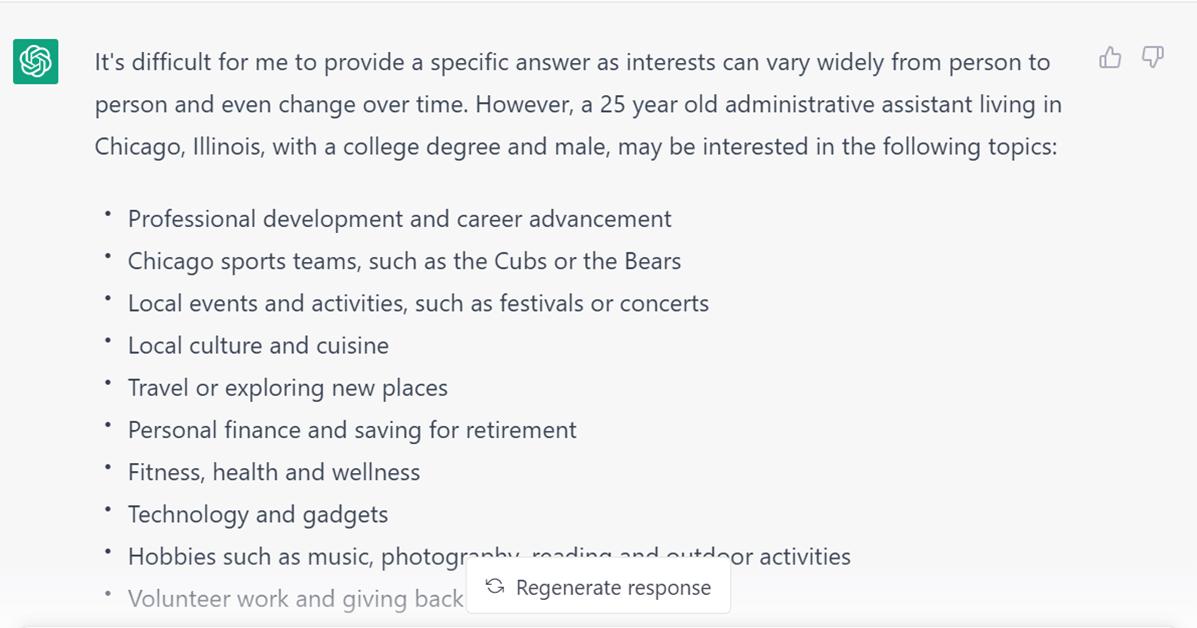

The results are even more revealing when you target people’s bosses. Consider the query: What do CEOs of large insurance companies have in common and what interests them? ChatGPT returns the following:

Combining these two ideas makes it possible to identify subjects that could hijack the administrative assistant’s interest. The goal is to get the assistant to click on something they think might advance their career. With luck, the attacker then gains a direct connection to that company’s CEO. Consider the following example:

AI-generated phishing, smishing and vishing campaigns have yet to be seen. However, if malware writers’ adoption of the technology is any indication, such campaigns are not far off.


ChatGPT Misuse: Malware Attacks

The checks against misuse are even weaker when it comes to generating code. There have been reports of unskilled attackers generating malicious code using nothing more than malware researchers’ descriptions of that code. These early attempts do not use sophisticated techniques, and existing anti-malware and endpoint detection and response (EDR) software will likely catch them easily. However, obfuscation is trivial to apply, and tools that feed ChatGPT output into an obfuscation routine are almost certainly being developed now.

This type of code generation will likely become more difficult as the engine matures and more controls are added. The critical lesson, however, is that a technology that lowers a barrier to entry lowers it for everyone, not just the “good guys.” This problem applies to all arms races, but it is particularly noticeable with early-stage technologies such as ChatGPT.

ChatGPT and Classes of Vulnerabilities

It is useful to consider vulnerabilities from a class-based view. The industry often improves significantly when an entire class of vulnerabilities is eradicated. For example, memory-corruption bugs such as buffer overflows largely became non-issues in languages that simply don’t allow direct memory access. Unfortunately, new classes of vulnerabilities replaced them, such as incorrect variable typing and improper use of features bundled with the language, including network stack abuse.
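The short sketch below shows what it means for a language to eliminate the buffer-overflow class. It uses Python only as one example of a bounds-checked language, and the function names are hypothetical. The naive byte-by-byte copy loop would silently overrun a fixed-size array in C. Here, the runtime rejects the first out-of-bounds write instead.

```python
def copy_into_buffer(buf: bytearray, data: bytes) -> None:
    """Copy data into buf one byte at a time with no explicit length check,
    mirroring a naive C loop that would overflow a fixed-size array."""
    for i, b in enumerate(data):
        buf[i] = b  # every index is checked; i >= len(buf) raises IndexError


def try_overflow() -> str:
    buf = bytearray(8)  # fixed-size 8-byte buffer
    try:
        copy_into_buffer(buf, b"A" * 16)  # input twice the buffer's size
    except IndexError:
        # The write past the end is refused rather than corrupting adjacent memory.
        return "rejected"
    return "overflowed"


print(try_overflow())  # prints "rejected"
```

The first eight bytes are still written before the error is raised, so the program must still handle the failure. What disappears is the silent corruption of adjacent memory, which is the flaw that made this vulnerability class exploitable.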

As ChatGPT evolves, it will very likely help eliminate an entire class of vulnerabilities, such as the common errors made by entry-level programmers. However, it will almost certainly introduce new classes of vulnerabilities, such as common shortcuts and errors made by the AI engine itself. Time will tell how this plays out. It will be particularly interesting when multiple code-generation AI systems can be played against one another.

ChatGPT Guidance for Security Teams

Besides misuse, the biggest concern for businesses today is the speed/quality tradeoff when using ChatGPT. Organizations should encourage experimentation with AI code and document generation systems. The technology is unlikely to go away, and early experimentation (not necessarily adoption) usually benefits the organization. However, great care should be taken when using the outputs of a system like ChatGPT directly. To sidestep the thorniest issues:

- Consider ChatGPT for rapid prototyping: If the goal is to clearly define a need, ChatGPT can generate prototype code. However, take even more care than usual to keep experimental or demo code out of production until it has been thoroughly vetted. Higher-risk organizations should build a hard wall between the AI-influenced environment and the standard development, staging and production stacks. This ensures all AI code is completely rewritten before it is adopted.

- Invest in careful practice: One of the biggest risks of this new technology is its use to generate misleading content (e.g., phishing or business email compromise). Make sure a single process failure isn’t enough to cause a catastrophic loss. Retrain your people to follow proper approval procedures, even when a message seems real and urgent.
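The principle of ensuring “a single process failure isn’t enough to cause a catastrophic loss” can be expressed as a dual-control rule. The sketch below is a minimal example that assumes a hypothetical payment-release workflow. The class, function and field names are illustrative and do not come from any real product. The rule requires two independent approvers, neither of whom can be the requester, plus an out-of-band verification step before funds move. Under this rule, a convincing AI-written email cannot release money by itself.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentRequest:
    # Hypothetical model of a request to move funds.
    requester: str
    amount: float
    approvers: set[str] = field(default_factory=set)
    # Set only after confirming through a separate, known channel
    # (e.g., a call-back to a phone number already on file).
    verified_out_of_band: bool = False


def approve(req: PaymentRequest, approver: str) -> None:
    # The person who asked for the payment can never approve it.
    if approver == req.requester:
        raise PermissionError("requester cannot approve their own request")
    req.approvers.add(approver)  # a set, so repeat approvals by one person count once


def can_release(req: PaymentRequest, required_approvals: int = 2) -> bool:
    # Both controls must pass; either one failing blocks the release.
    return len(req.approvers) >= required_approvals and req.verified_out_of_band


# Usage: an "urgent" request stays blocked until every control is satisfied.
req = PaymentRequest(requester="alice", amount=250_000.0)
approve(req, "bob")
approve(req, "carol")
print(can_release(req))  # False: no out-of-band verification yet
req.verified_out_of_band = True
print(can_release(req))  # True
```

The approval threshold and the verification channel are policy choices. The design point is that the message’s apparent urgency is never an input to the decision.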

Although reasonable efforts will be made to ensure the completeness and accuracy of the information contained in our blog posts, no liability can be accepted by IANS or our Faculty members for the results of any actions taken by individuals or firms in connection with such information, opinions, or advice.