Tips to Accelerate the Secure Adoption of Agentic AI

Agentic AI—autonomous systems capable of independently performing tasks and making decisions—offers the potential for major operational efficiencies but also introduces complex security and compliance risks. For CISOs, navigating this landscape requires proactive implementation of strong governance, identity management, and operational controls.

What are the Critical Agentic AI Concepts?

Agentic AI goes beyond generative AI: instead of only responding to explicit human instructions, it executes tasks on its own. The term “agentic” emphasizes the active, independent nature of AI systems that can both make and carry out decisions. This autonomy introduces unique complexities, so security leaders need to understand a few foundational concepts to manage the risks while still capturing the benefits for the organization:

- Agent: In this context, an agent is a system or software component that performs actions independently to achieve specific goals without continuous human intervention. Agents perceive their environment, reason about appropriate actions and execute decisions based on predefined objectives or learned strategies. "Agentic" refers specifically to this capacity to act proactively and independently. Properly governed agents can significantly streamline operations; unmanaged, they can introduce critical security vulnerabilities.

- Examples: Virtual assistants autonomously scheduling meetings, cybersecurity agents independently monitoring and responding to threats, or customer service chatbots autonomously resolving support issues.

- Guardrails: Guardrails are policies, rules and technical controls that constrain agentic AI behavior within safe, ethical and regulatory-compliant boundaries. They help security teams ensure AI systems behave predictably and responsibly, mitigating operational and compliance risks. Frameworks such as the NIST AI Risk Management Framework (AI RMF) and the ISO/IEC 42001 standard often inform their development.

- Example: Requiring human approval before an agent executes financial decisions, or enforcing access restrictions that prevent AI assistants from handling sensitive or confidential data.

- Nonhuman identities (NHIs): NHIs are digital identities assigned specifically to autonomous AI agents, distinct from those of human users. They make the actions performed by AI agents traceable and accountable, which is critical for auditability and security compliance. Mismanaged NHIs can accumulate excessive privileges and lead to security breaches.

- Example: Dedicated service accounts for cybersecurity agents performing automated threat detection or specialized credentials assigned to AI-driven automation processes interacting with privileged systems.
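
To make guardrails and NHIs more concrete, the Python sketch below shows one way an agent's own identity, a least-privilege scope check and a human-approval rule could fit together, with every decision logged against that identity. It is illustrative only: `AgentIdentity`, `Guardrail`, the scope names and the 1,000 approval threshold are hypothetical and do not come from any specific product or framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical nonhuman identity (NHI) issued to a single agent."""
    agent_id: str
    owner: str              # accountable human or team
    scopes: frozenset[str]  # least-privilege permissions granted to the agent


@dataclass
class Guardrail:
    """Hypothetical policy check applied before an agent acts."""
    approval_threshold: float = 1000.0  # illustrative value, not a recommendation
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent: AgentIdentity, action: str,
                  amount: float = 0.0, approver: str | None = None) -> bool:
        if action not in agent.scopes:
            decision = "deny: out of scope"
        elif (action == "payments:execute"
              and amount > self.approval_threshold
              and approver is None):
            decision = "deny: human approval required"
        else:
            decision = "allow"
        # Record every decision, allowed or not, against the agent's own identity.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent.agent_id,
            "owner": agent.owner,
            "action": action,
            "amount": amount,
            "approver": approver,
            "decision": decision,
        })
        return decision == "allow"


if __name__ == "__main__":
    bot = AgentIdentity("ap-agent-01", "finance-ops",
                        frozenset({"invoices:read", "payments:execute"}))
    guard = Guardrail()
    print(guard.authorize(bot, "invoices:read"))                  # True
    print(guard.authorize(bot, "payments:execute", amount=5000))  # False
    print(guard.authorize(bot, "payments:execute", amount=5000,
                          approver="j.doe"))                      # True
    print(guard.authorize(bot, "hr:read"))                        # False
```

Logging denials as well as approvals is a deliberate choice here: the denied attempts are often the most useful evidence when reviewing whether an agent's privileges fit its job.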

Transitioning from Data Security to Knowledge Security

Security strategies have historically protected data at rest and in transit, focusing on preventing unauthorized access. Agentic AI fundamentally transforms this approach by generating and acting on new knowledge derived from data. CISOs must now evolve security practices to manage risks across the entire knowledge lifecycle, from data creation through AI processing to knowledge retention and deletion. This broader view requires rethinking data classification, privacy controls and knowledge management practices at every stage:

- Data creation: Initial inputs gathered from users, customers and third parties.

- Data storage: Managing structured and unstructured data securely in cloud or internal systems.

- AI processing: Using techniques such as embeddings, retrieval-augmented generation (RAG), Model Context Protocol (MCP) integrations and fine-tuning to transform data into insights.

- Knowledge generation: Producing summaries, forecasts and recommendations based on AI interaction with data.

- Knowledge storage and sharing: Capturing generated insights within wikis, dashboards, chats and agent memory. Once created, knowledge needs classification and sensitivity labels, just as data does.

- Knowledge and data deletion: Implementing retention policies and knowledge deletion, and accounting for model drift, to maintain compliance and privacy.

This evolution necessitates governance that addresses not just data handling, but the broader management of AI-generated knowledge.
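
As one illustration of labeling generated knowledge, the sketch below assumes a simple rule: a summary or forecast inherits the most restrictive label of the sources it was derived from, and its retention period follows from that label. The label names, retention periods and helper functions are hypothetical examples, not recommended values.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta
from enum import IntEnum


class Label(IntEnum):
    """Hypothetical sensitivity scale; a higher value is more restrictive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical retention periods in days, for illustration only.
RETENTION_DAYS = {
    Label.PUBLIC: 3650,
    Label.INTERNAL: 1095,
    Label.CONFIDENTIAL: 365,
    Label.RESTRICTED: 90,
}


@dataclass(frozen=True)
class Artifact:
    name: str
    label: Label
    created: date


def derive_knowledge(name: str, sources: list[Artifact], created: date) -> Artifact:
    """Label generated knowledge with the most restrictive label among its sources."""
    if not sources:
        raise ValueError("generated knowledge must record at least one source")
    return Artifact(name, max(s.label for s in sources), created)


def is_expired(item: Artifact, today: date) -> bool:
    """True once an artifact has outlived the retention period for its label."""
    return today > item.created + timedelta(days=RETENTION_DAYS[item.label])


if __name__ == "__main__":
    crm = Artifact("crm_export", Label.CONFIDENTIAL, date(2025, 1, 10))
    wiki = Artifact("product_wiki", Label.INTERNAL, date(2025, 1, 5))
    summary = derive_knowledge("churn_summary", [crm, wiki], date(2025, 2, 1))
    print(summary.label.name)                     # CONFIDENTIAL
    print(is_expired(summary, date(2026, 3, 1)))  # True
```

Inheriting the highest source label is a conservative default; a real program might allow a reviewed downgrade when a summary genuinely strips out the sensitive details.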

When to Adopt Agentic AI?

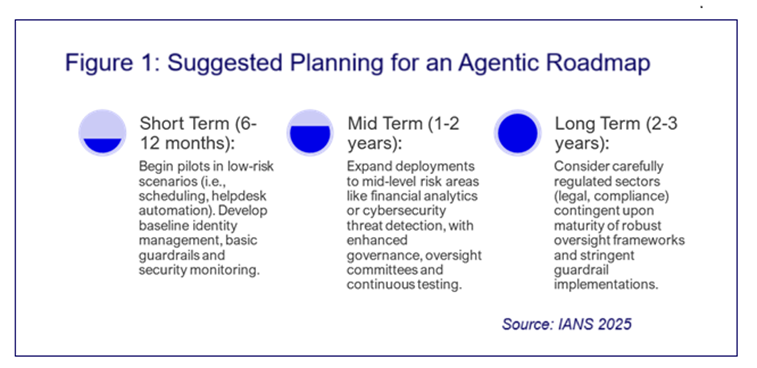

Agentic AI adoption is expected to occur swiftly over the next one to three years, especially within organizations already using AI-powered productivity tools like Microsoft 365 Copilot. Initial deployments typically focus on relatively low-risk tasks such as scheduling, internal information retrieval and customer service support.

Agentic AI will likely reach broader business-critical functions, such as cybersecurity, financial analysis and HR automation, by 2026, driven by demonstrated productivity gains and competitive pressure. However, sectors with significant legal, ethical or compliance risks (e.g., healthcare, legal services, financial management) will likely adopt it more slowly until governance and security practices mature.

Organizations that proactively establish strong governance frameworks and comprehensive security controls will be best positioned to capitalize on agentic AI safely (see Figure 1).

Security Implications to Consider

Transitioning from passive to autonomous AI fundamentally shifts the security landscape, introducing a range of complex challenges that require careful, long-term planning. Organizations must pace themselves thoughtfully, recognizing that implementing secure agentic AI is a gradual process rather than an immediate overhaul. Key security priorities include establishing robust identity and access management for nonhuman digital identities, ensuring transparency to mitigate AI drift and bias, and guarding against malicious manipulation such as prompt injection attacks.

To avoid shadow AI deployments and unauthorized use, clear governance frameworks must be put in place, alongside least-privilege policies and detailed audit logging. Rigorous oversight, including regular impact assessments, well-defined human intervention protocols, and strict usage policies, is essential for maintaining control and compliance. Continuous monitoring, adversarial testing, and red teaming further reinforce defenses, ensuring that agentic AI is deployed securely and responsibly.
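
Detailed audit logging pays off when the logs are reviewed against what has actually been approved. The sketch below assumes agent activity can be exported as simple records with an agent ID and an action, and compares it against an inventory of approved agents to flag unregistered agents (possible shadow AI), actions outside registered scopes, and granted scopes that went unused. The registry contents, record format and `review_activity` function are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical inventory of approved agents and the scopes registered for each.
REGISTRY: dict[str, set[str]] = {
    "ap-agent-01": {"invoices:read", "payments:execute"},
    "soc-triage-02": {"alerts:read", "tickets:write"},
}


def review_activity(events: list[dict], registry: dict[str, set[str]]) -> dict[str, set[str]]:
    """Compare observed agent activity with the approved inventory.

    Each event is assumed to be a dict with "agent_id" and "action" keys.
    """
    findings: dict[str, set[str]] = defaultdict(set)
    used: dict[str, set[str]] = defaultdict(set)
    for event in events:
        agent, action = event["agent_id"], event["action"]
        used[agent].add(action)
        if agent not in registry:
            findings["unregistered_agents"].add(agent)  # possible shadow AI
        elif action not in registry[agent]:
            findings["out_of_scope"].add(f"{agent}:{action}")
    # Granted scopes never exercised are candidates for least-privilege review.
    for agent, scopes in registry.items():
        for scope in scopes - used[agent]:
            findings["unused_scopes"].add(f"{agent}:{scope}")
    return dict(findings)


if __name__ == "__main__":
    events = [
        {"agent_id": "ap-agent-01", "action": "invoices:read"},
        {"agent_id": "ap-agent-01", "action": "hr:read"},
        {"agent_id": "marketing-gpt", "action": "crm:export"},
    ]
    for category, items in review_activity(events, REGISTRY).items():
        print(category, sorted(items))
```

Scopes that go unused in one review window are not necessarily excessive, so this sketch reports them as review candidates rather than revoking them automatically.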

Taking the Next Steps to Secure Agentic AI Adoption

Securely adopting agentic AI requires a proactive, coordinated approach that balances governance, oversight, and investment in people, process, and technology. Start by forming a cross-functional steering committee to ensure broad alignment across legal, risk, HR, finance, product, and operations. Task your IAM team with managing digital identities for nonhuman agents, and embed regular security reviews—including quarterly penetration tests and bi-monthly adversarial testing—into your operations. Think of your organization like a bowling alley: your people roll the ball, guided by well-defined processes (the rules), and protected by technology acting as bumpers to prevent serious missteps. By establishing clear governance, ongoing testing, and strong education and awareness programs, you’ll be positioned to harness the power of agentic AI while minimizing risk.

Any views or opinions presented in this document are solely those of the Faculty and do not necessarily represent the views and opinions of IANS. Although reasonable efforts will be made to ensure the completeness and accuracy of the information contained in our written reports, no liability can be accepted by IANS or our Faculty members for the results of any actions taken by the client in connection with such information, opinions, or advice.
